Aarna Networks Brings AI Cloud Orchestration from Cloud to the Edge

In AI cloud orchestration, a centralized console or controller translates business requirements into infrastructure, network, and application configuration commands that govern the behavior of the AI cloud's components, such as GPU/CPU hardware, IP and InfiniBand switches, and storage.
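
To make the translation step concrete, here is a minimal Python sketch. It assumes a hypothetical `TenantIntent` record describing what a tenant wants and a hypothetical `translate()` function that expands it into per-layer actions. The emitted strings are illustrative placeholders, not commands from AMCOP or any real switch, GPU, or storage API.

```python
from dataclasses import dataclass


@dataclass
class TenantIntent:
    """Hypothetical business-level request: what a tenant wants, not how to build it."""
    tenant: str
    gpus: int
    storage_tb: int
    isolated_network: bool = True


def translate(intent: TenantIntent) -> list[str]:
    """Expand one intent into per-layer configuration actions (placeholder strings only)."""
    actions = [f"compute: allocate {intent.gpus} GPU(s) to {intent.tenant}"]
    if intent.isolated_network:
        # Isolated tenants get their own segment on the IP/InfiniBand fabric.
        actions.append(f"network: create isolated segment for {intent.tenant}")
    actions.append(f"storage: provision {intent.storage_tb} TB volume for {intent.tenant}")
    return actions


if __name__ == "__main__":
    for action in translate(TenantIntent("acme", gpus=8, storage_tb=10)):
        print(action)
```

The point of the design is that the caller states only the requirement; deciding which layers to touch, and in what form, is the orchestrator's job.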

Orchestration already existed in the CPU era with VMware and OpenStack, but the concept evolved with the advent of AI clouds: AI cloud orchestration automates the tasks needed to create fully isolated GPU instances from a shared GPU pool. Now, with the emergence of private 5G networks, IoT, and AI, there is also edge AI orchestration, in which orchestration software manages, automates, and coordinates the flow of resources across multiple types of devices as well as machine learning applications. This next-generation technology helps AI cloud service providers and enterprises make the most efficient use of their GPU resources.
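
The following sketch illustrates, under simplifying assumptions, what "fully isolated GPU instances from a shared pool" can mean at the allocation level: each whole GPU is granted to exactly one tenant and returns to the pool on release. The `GpuPool` class and GPU names are hypothetical. A production system would also have to configure drivers, networking, and possibly NVIDIA Multi-Instance GPU (MIG) partitions, none of which is modeled here.

```python
class GpuPool:
    """Hypothetical pool that hands out whole GPUs exclusively, one tenant per GPU."""

    def __init__(self, gpu_ids: list[str]) -> None:
        self.free = list(gpu_ids)           # GPUs not assigned to anyone
        self.owner: dict[str, str] = {}     # gpu_id -> tenant that holds it

    def allocate(self, tenant: str, count: int) -> list[str]:
        """Grant `count` free GPUs to `tenant`, or fail without changing anything."""
        if count > len(self.free):
            raise RuntimeError(f"only {len(self.free)} GPU(s) free, {count} requested")
        granted = [self.free.pop(0) for _ in range(count)]
        for gpu in granted:
            self.owner[gpu] = tenant
        return granted

    def release(self, tenant: str) -> list[str]:
        """Return every GPU held by `tenant` to the free list."""
        mine = [gpu for gpu, t in self.owner.items() if t == tenant]
        for gpu in mine:
            del self.owner[gpu]
            self.free.append(gpu)
        return mine


if __name__ == "__main__":
    pool = GpuPool(["gpu0", "gpu1", "gpu2", "gpu3"])
    print(pool.allocate("tenant-a", 2))
    print(pool.allocate("tenant-b", 2))
    print(pool.release("tenant-a"))
```

Because a GPU appears either in `free` or in `owner`, never both, two tenants can never be granted the same device.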

A game changer for AI Cloud GPU-as-a-Service providers

Aarna Networks, a San Jose-based startup backed by NVIDIA and venture investors, is capitalizing on this shift. It offers orchestration and management software that lets GPU-as-a-Service AI cloud vendors get into production quickly without having to develop this software in-house.

“We believe GenAI will unleash a new wave of applications that will be bigger than web/mobile. With 1,000s of AI application instances changing dynamically across 10,000s of edge and cloud sites, the management dilemma becomes 100,000x more complex than before,” says Amar Kapadia, Aarna Networks Co-Founder and CEO.

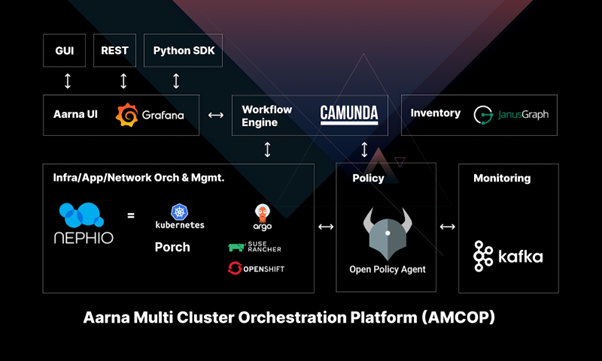

Amar and his co-founder Sriram Rupanagunta founded Aarna on the belief that an effective management solution can be a game changer in helping organizations achieve a strong ROI on their AI investments. The company’s open-source Aarna Multi Cluster Orchestration Platform (AMCOP) is a Kubernetes-based orchestration and management platform for GPU-as-a-Service AI cloud and edge infrastructure. It is declarative and intent-based with constant reconciliation, scales massively from edge to cloud, and supports multiple layers, covering infrastructure, containers-as-a-service (CaaS), and network services.
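
"Declarative with constant reconciliation" describes a control-loop pattern familiar from Kubernetes: the user declares a desired state, and the system repeatedly compares it with the observed state and corrects any drift. The sketch below is a minimal illustration of that pattern under stated assumptions, not AMCOP's implementation. The workload names are hypothetical, and the "apply" step only updates an in-memory record where a real controller would call infrastructure APIs.

```python
def diff(desired: dict[str, int], observed: dict[str, int]) -> list[tuple[str, int]]:
    """Return (workload, delta) pairs that would move observed state to desired state."""
    actions = []
    for name in sorted(set(desired) | set(observed)):
        delta = desired.get(name, 0) - observed.get(name, 0)
        if delta != 0:
            actions.append((name, delta))
    return actions


def reconcile_once(desired: dict[str, int], observed: dict[str, int]) -> list[tuple[str, int]]:
    """One pass of the control loop: detect drift and (here, simulated) correct it."""
    actions = diff(desired, observed)
    for name, delta in actions:
        # A real controller would call infrastructure APIs here; we just update the record.
        observed[name] = observed.get(name, 0) + delta
        if observed[name] == 0:
            del observed[name]
    return actions


if __name__ == "__main__":
    desired = {"edge-site-a/inference": 3, "cloud/training": 1}
    observed = {"edge-site-a/inference": 1, "old-app": 2}
    print(reconcile_once(desired, observed))  # create, scale up, and remove
    observed["edge-site-a/inference"] = 2     # simulate drift: one replica lost
    print(reconcile_once(desired, observed))  # the next pass repairs it
    print(reconcile_once(desired, observed))  # converged: no actions
```

Running the pass continuously, rather than once at deploy time, is what lets a declarative system repair drift such as a lost replica without anyone re-issuing commands.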

“We take a full-stack view of the AI environment,” says Amar. “To us, the environment represents any underlying infrastructure, including GPUs, compute, storage, and networking. Above that are network services such as 5G and applications such as machine learning. Our platform orchestrates the entire stack and manages it over time.”
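
Orchestrating a full stack means respecting dependencies between layers: infrastructure before network services, and network services before the applications that use them. As a minimal sketch under that assumption, Python's standard `graphlib.TopologicalSorter` can derive a safe bring-up order. The component names and dependency edges below are hypothetical and are not taken from AMCOP.

```python
from graphlib import TopologicalSorter

# Hypothetical stack: each component maps to the components it depends on.
STACK: dict[str, set[str]] = {
    "gpu-servers": set(),
    "storage": set(),
    "network-fabric": set(),
    "kubernetes-cluster": {"gpu-servers", "storage", "network-fabric"},
    "5g-core": {"kubernetes-cluster"},
    "ml-inference-app": {"kubernetes-cluster", "5g-core"},
}


def bring_up_order(stack: dict[str, set[str]]) -> list[str]:
    """Return an order in which every component starts after its dependencies."""
    return list(TopologicalSorter(stack).static_order())


def tear_down_order(stack: dict[str, set[str]]) -> list[str]:
    """Reverse of bring-up: remove applications before the infrastructure beneath them."""
    return list(reversed(bring_up_order(stack)))


if __name__ == "__main__":
    print(bring_up_order(STACK))
    print(tear_down_order(STACK))
```

`TopologicalSorter` also raises `graphlib.CycleError` if the declared dependencies contain a loop, which is a useful sanity check on a stack description.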

Aarna had its beginnings around 2020. The team had a rich open-source background, having worked with OpenStack and Kubernetes. They understood the power of open source well but saw a gap in orchestration and management that no one was addressing.

Highly differentiated solutions and a GTM powered by NVIDIA investment

Aarna’s go-to-market strategy leverages the Cloud Native Computing Foundation (CNCF) and Linux Foundation Networking (LF Networking, or LFN) to bring its platform to developers around the world. Through these relationships, Aarna uses several open-source projects to raise awareness of its platform among developers. A recent investment in the company by NVIDIA has further boosted its go-to-market efforts.

The challenge with an open-source go-to-market strategy is always monetization. Inspired by Kubernetes, Aarna has adopted an opinionated distribution model where it mixes and matches various open-source projects to create products that are highly differentiated and difficult to duplicate. It then relies on a traditional software licensing and support model to drive revenue. Solution offerings include:

AI Cloud: GPU-as-a-Service, a solution that allows data centers and GPU-as-a-Service providers to build their own multi-tenant AI clouds;

AI-and-RAN on a GPU Cloud, which allows mobile network operators to run the RAN and a GPU cloud together and dramatically lower 5G CAPEX by dynamically re-allocating the 70-80% of radio access network (RAN) capacity that sits underutilized to AI/ML workloads;

AI-and-Private 5G Orchestration and Management, which gives businesses operating private 5G networks control over those networks so they can lower costs, increase margins, and enable edge AI/ML applications; and

GPU Spot Instances, which allows GPU owners to offset their operating costs by using Aarna to register and unregister their idle NVIDIA GPU cycles as spot instances with aggregators.
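
The register/unregister cycle behind GPU spot instances can be sketched as a simple synchronization step: list GPUs that are idle, and withdraw any that the owner needs again. This is a minimal illustration under stated assumptions. `Aggregator` is a hypothetical in-memory stand-in for a spot marketplace, and `sync_spot_listings()` is a hypothetical helper; neither reflects Aarna's or any aggregator's actual API.

```python
class Aggregator:
    """Stand-in for a spot-capacity aggregator (hypothetical; no real API implied)."""

    def __init__(self) -> None:
        self.listed: set[str] = set()

    def register(self, gpu_id: str) -> None:
        self.listed.add(gpu_id)

    def unregister(self, gpu_id: str) -> None:
        self.listed.discard(gpu_id)


def sync_spot_listings(all_gpus: list[str], busy_gpus: list[str], aggregator: Aggregator) -> list[str]:
    """Offer idle GPUs as spot capacity and withdraw any the owner needs again."""
    idle = set(all_gpus) - set(busy_gpus)
    for gpu in sorted(idle - aggregator.listed):
        aggregator.register(gpu)      # newly idle: list it
    for gpu in sorted(aggregator.listed - idle):
        aggregator.unregister(gpu)    # back in use: delist it
    return sorted(aggregator.listed)


if __name__ == "__main__":
    gpus = ["gpu0", "gpu1", "gpu2", "gpu3"]
    agg = Aggregator()
    print(sync_spot_listings(gpus, ["gpu0"], agg))
    print(sync_spot_listings(gpus, ["gpu0", "gpu1", "gpu2"], agg))
```

Calling the sync step whenever the owner's own workload changes keeps the listings accurate, so GPUs are offered to the aggregator only while they would otherwise sit idle.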

While the company initially focused its offerings on edge enablement, the rapid growth in AI/ML has opened up new and exciting opportunities for Aarna’s orchestration technology, propelled by its relationship with NVIDIA.

“The investment from NVIDIA validates our strategy. They are the dominant player in the world of AI/ML today,” says Amar.

Aarna’s relationship with the 5G Open Innovation Lab (5G OIL) began with an introduction from Kevin Ober, a General Partner with 5G OIL who oversees the Lab’s rolling investment fund. After meeting Lab Managing Director Jim Brisimitzis, Amar and Aarna joined the Lab as part of its first startup batch.

“We’ve benefitted from 5G OIL in several ways,” says Amar. “Jim and Kevin are so well connected and have a stellar reputation within the technology and investor world. That is brand equity we’ve been able to leverage. In addition, the Lab itself has been instrumental to us and our work in the private 5G and AI/ML realm. It provided us with the technology infrastructure to test out and prove our use cases.”

Most recently, that relationship has driven Aarna’s involvement in Project Blueberry, the Lab’s private 5G network-in-a-box solution. Explains Amar, “Blueberry has different variations. One recipe is Druid Software for the 5G Core, Airspan for the RAN, and Aarna Networks as the orchestrator. It’s been a perfect opportunity for us to work hand in hand with the Lab to demonstrate our capabilities and enterprise-prove our solutions together.”